InstantStyle.

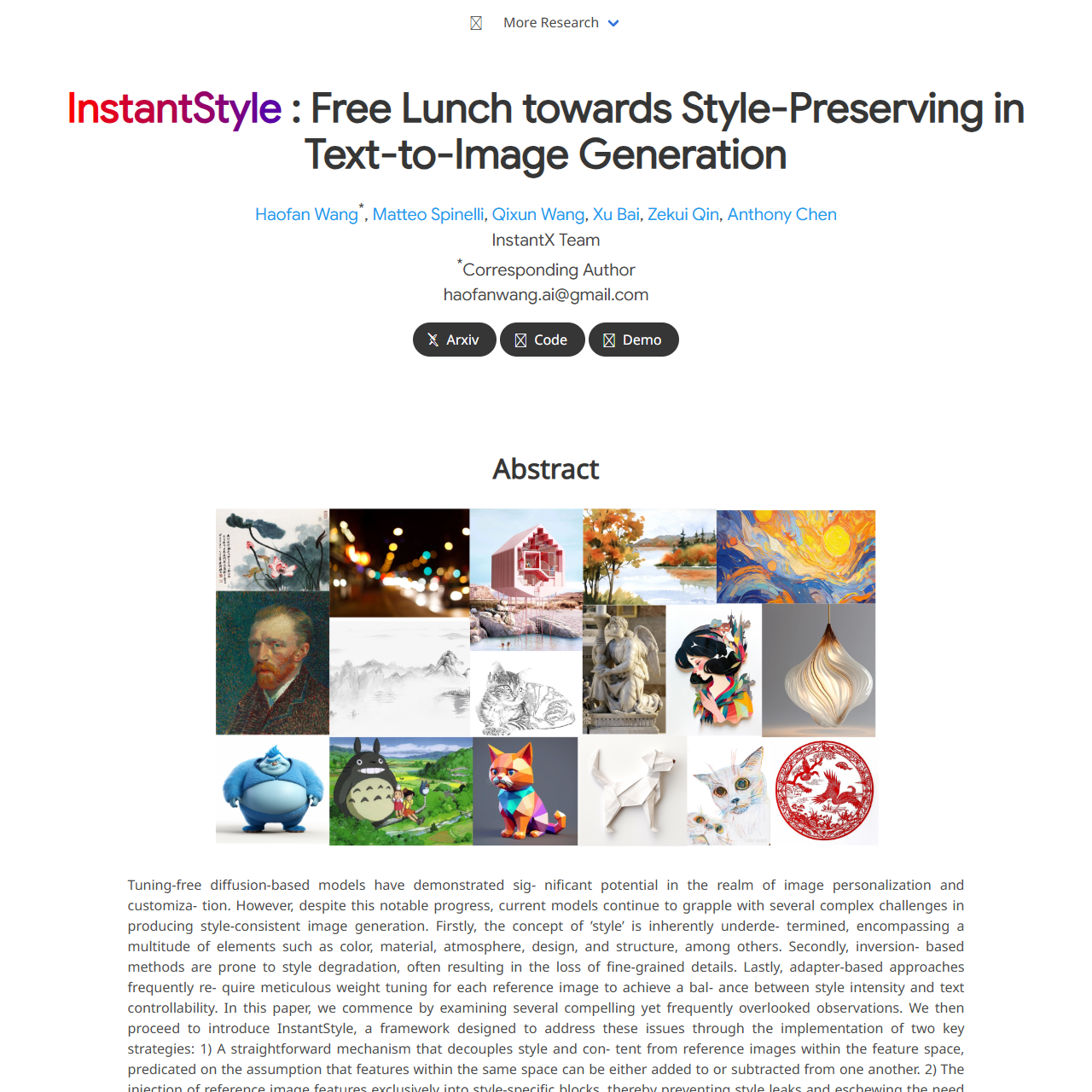

Tuning-free diffusion-based models have demonstrated sig- nificant potential in the realm of image personalization and customiza- tion. However, despite this notable progress, current models continue to grapple with several complex challenges in producing style-consistent image generation. Firstly, the concept of ’style’ is inherently underde- termined, encompassing a multitude of elements such as color, material, atmosphere, design, and structure, among others. Secondly, inversion- based methods are prone to style degradation, often resulting in the loss of fine-grained details. Lastly, adapter-based approaches frequently re- quire meticulous weight tuning for each reference image to achieve a bal- ance between style intensity and text controllability. In this paper, we commence by examining several compelling yet frequently overlooked observations. We then proceed to introduce InstantStyle, a framework designed to address these issues through the implementation of two key strategies: 1) A straightforward mechanism that decouples style and con- tent from reference images within the feature space, predicated on the assumption that features within the same space can be either added to or subtracted from one another. 2) The injection of reference image features exclusively into style-specific blocks, thereby preventing style leaks and eschewing the need for cumbersome weight tuning, which often charac- terizes more parameter-heavy designs.Our work demonstrates superior visual stylization outcomes, striking an optimal balance between the in- tensity of style and the controllability of textual elements.

Injecting into Style Blocks Only. Empirically, each layer of a deep network captures different semantic information the key observation in our work is that there exists two specific attention layers handling style. Specifically, we find up blocks.0.attentions.1 and down blocks.2.attentions.1 capture style (color, material, atmosphere) and spatial layout (structure, composition) respectively. We can use them to implicitly extract style information, further preventing content leakage without losing the strength of the style. The idea is straightforward, as we have located style blocks, we can inject our image features into these blocks only to achieve style transfer seamlessly. Furthermore, since the number of parameters of the adapter is greatly reduced, the text control ability is also enhanced. This mechanism is applicable to other attention-based feature injection for editing or other tasks.

数据统计

数据评估

关于InstantStyle特别声明

本站鸟瑞导航提供的InstantStyle数据都来源于网络,不保证外部链接的准确性和完整性,同时,对于该外部链接的指向,不由鸟瑞导航实际控制,在2025年9月10日 下午7:02收录时,该网页上的内容,都属于合法合规,后期网页的内容如出现违规,请联系本站网站管理员进行举报,我们将进行删除,鸟瑞导航不承担任何责任。

相关导航

Fast3D is the leading AI-powered 3D model generator. Create high-quality 3D models from text or images in seconds.

DeepMotion – AI Motion Capture & Body Tracking

Generate 3D animations from vi...

图星人AI生图

免费AI商用图片库,100万+AI创意图片可直接下载商用或AI生成同款图片。1000+设计师最常用的、最新颖的AI图片风格模型,可一键生成类似风格图片。不会写生图指令,不会调模型参数,也能快速上手生成各种最新风格图片素材

Raphael AI

Raphael AI, the world's first completely free and unlimited AI Image Generator powered by FLUX.1-Dev model. No registration required, superior image quality.

Arthub ai

Arthub.ai is a creative community for showcasing, discovering and creating AI generated art.

Depth Anything

Depth Anything

Civitai社区

Explore thousands of high-quality Stable Diffusion & Flux models, share your AI-generated art, and engage with a vibrant community of creators

大设AI

大设网(原AI大作)是基于Stable Diffusion的免费ai绘画网站,为ai作画爱好者提供一键生成高清精绘大图、sdxl模型保姆级教程、AI提示词工具。在大设ai人工智能绘画平台随意发挥自己的绘画创意。

暂无评论...